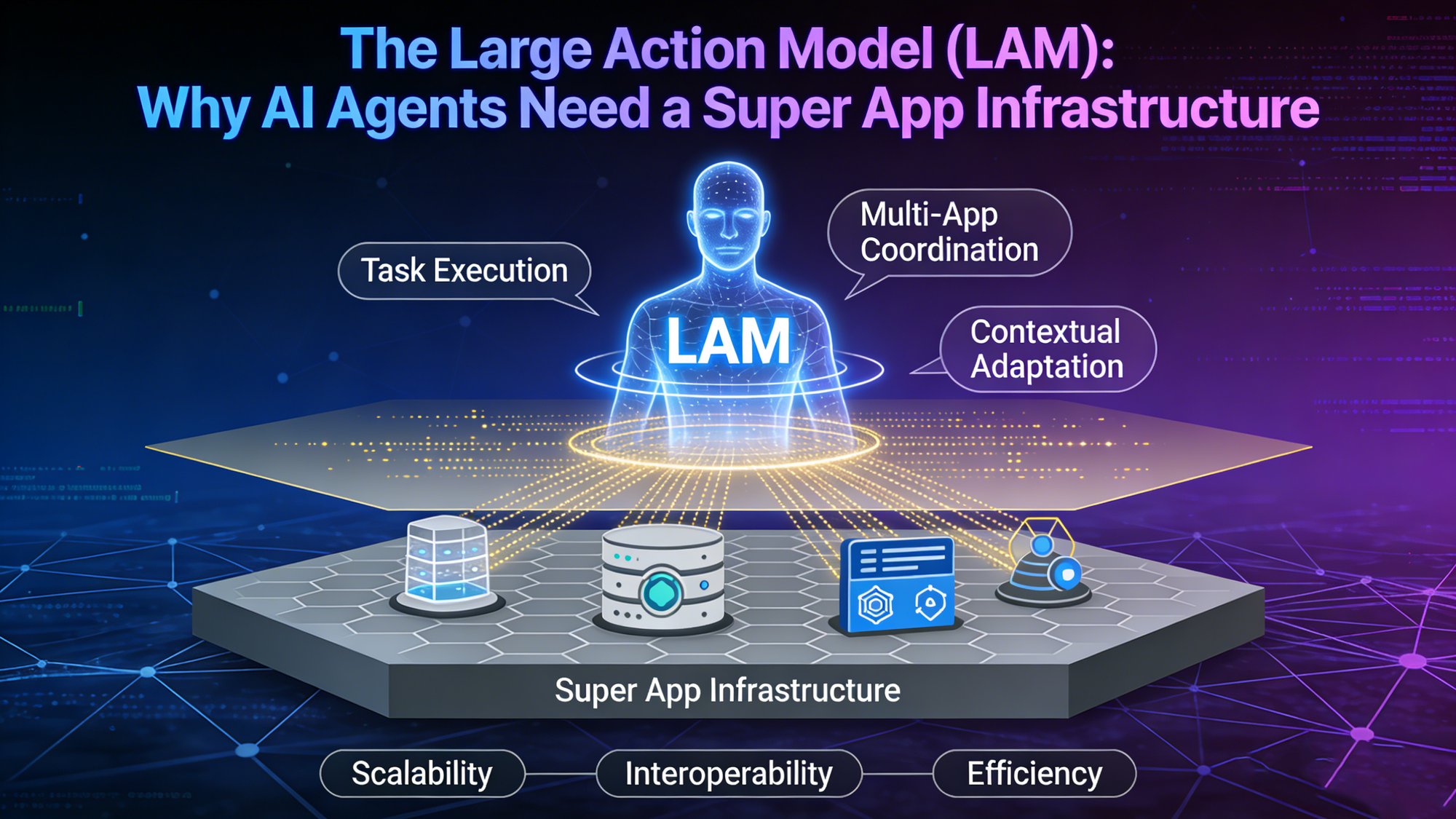

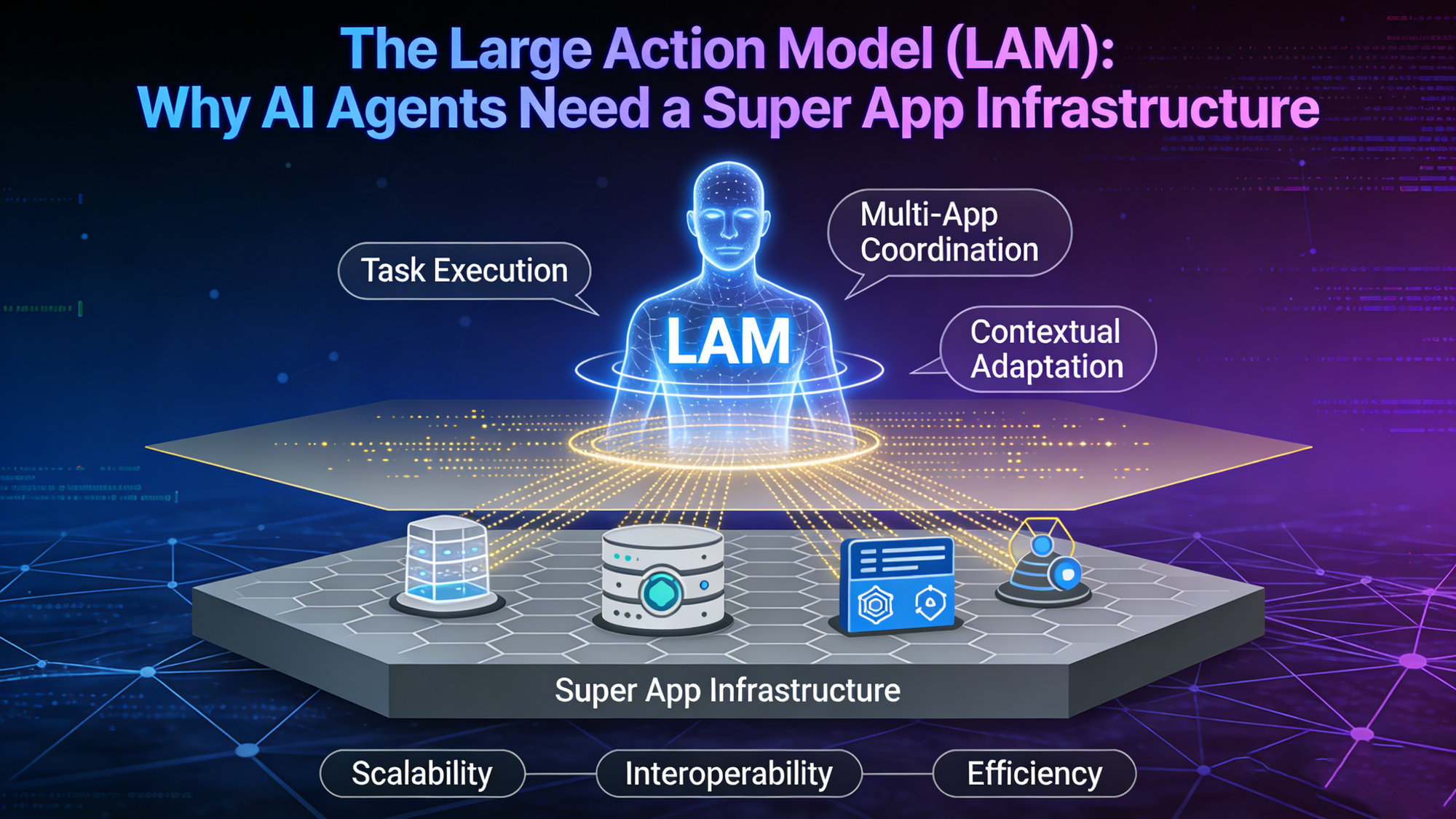

The Large Action Model (LAM): Why AI Agents Need a Super App Infrastructure

Introduction: From "Chat" to "Action"

In 2023, the world was mesmerized by Large Language Models (LLMs). We discovered that computers could talk, reason, and generate poetry.

In 2024, the novelty began to fade. We realized that while LLMs could write a poem about ordering a pizza, they couldn't actually order the pizza.

As we stand in 2026, the AI industry has shifted its focus from Language to Action. We are entering the era of the Large Action Model (LAM) and AI Agents.

The goal of an AI Agent is not to chat; it is to execute complex workflows such as: "Book a flight to London, find a hotel near the conference center, and expense it to my corporate card."

However, AI Agents face a massive practical barrier: The Interface Problem.

Most mobile applications are "Black Boxes" to an AI. They are compiled binary code (APKs/IPAs) designed for human thumbs, not digital brains. For AI Agents to fulfill their promise, they need a structured, standardized, and dynamic interface to interact with the world.

This article argues that the Super App Architecture, powered by FinClip Mini-Apps, provides the missing "Action Layer" for the age of AI.

1. The "Last Mile" Problem of AI

To understand why AI needs FinClip, we must first understand why current approaches fail.

The Problem with APIs

The traditional engineering approach is: "Let the AI call the API."

While powerful, this is unscalable. A "General Purpose" AI Agent cannot possibly integrate with the millions of proprietary REST APIs used by every local flower shop, dry cleaner, and parking garage. APIs change, break, and require complex authentication handling that is invisible to the user.

The Problem with Native Apps (Vision Processing)

Another approach, popularized by devices like the Rabbit r1, involves using Computer Vision to "look" at the smartphone screen and simulate human clicks.

This is fragile. If the Uber app redesigns a screen or moves a button, the automation can break. It is also slow (every step waits on a screenshot and a vision pass) and insecure (the AI gets full control over your screen).

The Solution: A Structured Frontend

AI needs a middle ground. It needs a format that is:

- Human-Readable: So the user can verify what the AI is buying.

- Machine-Parsable: So the AI can understand the structure of the data and the action.

This is exactly what a Mini-Program is: a lightweight package of Logic (JS), Structure (markup templates and JSON configuration), and Styling (CSS) that defines a service.

2. FinClip as the "Hand" of the AI Agent

If the LLM is the "Brain," FinClip is the "Hand."

By adopting a Super App architecture, an enterprise creates a standardized library of actions that an AI can easily invoke. FinClip acts as the Deterministic Runtime for the probabilistic AI.

The "Tool Use" Paradigm

In modern AI development (e.g., OpenAI's Function Calling), models are trained to select "Tools."

In a FinClip ecosystem, every Mini-App is a Tool.
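One way to picture "every Mini-App is a Tool" is a small adapter that turns a mini-app description into the JSON Schema style tool definition used by function-calling APIs. The sketch below is illustrative only: the `MiniAppManifest` shape, its `params` field, and `manifestToTool` are hypothetical names, and a standard app.json does not necessarily declare launch parameters in this form.

```typescript
// Hypothetical manifest shape. The `params` field is an assumption made
// for this sketch, not a documented part of app.json.
interface ParamSpec {
  type: "string" | "number";
  description: string;
  required?: boolean;
}

interface MiniAppManifest {
  appId: string;
  name: string;
  description: string;
  params: Record<string, ParamSpec>;
}

// Tool definition in the JSON Schema style used by function-calling APIs.
interface ToolDefinition {
  name: string;
  description: string;
  parameters: {
    type: "object";
    properties: Record<string, { type: string; description: string }>;
    required: string[];
  };
}

function manifestToTool(manifest: MiniAppManifest): ToolDefinition {
  const properties: Record<string, { type: string; description: string }> = {};
  const required: string[] = [];
  for (const key of Object.keys(manifest.params)) {
    const spec = manifest.params[key];
    if (!spec) continue;
    properties[key] = { type: spec.type, description: spec.description };
    if (spec.required) required.push(key);
  }
  return {
    // Replace characters that tool names commonly disallow (such as dots).
    name: manifest.appId.replace(/[^a-zA-Z0-9_-]/g, "_"),
    description: `${manifest.name}: ${manifest.description}`,
    parameters: { type: "object", properties, required },
  };
}

const flightBooking: MiniAppManifest = {
  appId: "com.airline.booking",
  name: "FlightBooking",
  description: "Search and book flights",
  params: {
    destination: { type: "string", description: "Destination airport code", required: true },
    date: { type: "string", description: "Departure date, YYYY-MM-DD", required: true },
    seatType: { type: "string", description: "Cabin class" },
  },
};

const flightTool = manifestToTool(flightBooking);
```

The resulting `flightTool` object could then be passed to a model alongside other tools, so the model selects a mini-app the same way it would select any other function.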

- Manifest Files as AI Instructions:

Every FinClip mini-app has a configuration file (app.json). This acts as the "Instruction Manual" for the AI Agent.

- AI Analysis: "I see a mini-app named 'FlightBooking'. Its inputs are 'Destination', 'Date', and 'SeatType'."

- The Execution Flow:

- User Intent: "Book a flight to NYC."

- AI Agent: Queries the FinClip Store Index. Finds the com.airline.booking mini-app.

- Parameter Filling: The AI generates the startup parameters: { dest: 'JFK', date: '2026-02-01' }.

- Action: The AI instructs the FinClip Runtime to launch the mini-app with these parameters pre-filled.

The result? The mini-app pops up instantly, with the flight already selected, waiting for the user to hit "Pay." This is the perfect symbiotic relationship between Human control and AI automation.
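The execution flow above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not FinClip's actual API: `IndexEntry`, `findMiniApp`, `buildLaunchRequest`, and the `com.hotel.search` entry are hypothetical, and the keyword match stands in for the model's own tool selection.

```typescript
// Hypothetical store index entry; a real index would carry richer metadata.
interface IndexEntry {
  appId: string;
  keywords: string[];
  requiredParams: string[];
}

interface LaunchRequest {
  appId: string;
  startParams: Record<string, string>;
}

const storeIndex: IndexEntry[] = [
  { appId: "com.airline.booking", keywords: ["flight", "fly"], requiredParams: ["dest", "date"] },
  { appId: "com.hotel.search", keywords: ["hotel", "room"], requiredParams: ["city"] },
];

// Query the index. A simple keyword match is used here in place of
// the model choosing a tool.
function findMiniApp(intent: string, index: IndexEntry[]): IndexEntry | undefined {
  const words = intent.toLowerCase().split(/\W+/);
  for (const entry of index) {
    if (entry.keywords.some((k) => words.indexOf(k) !== -1)) return entry;
  }
  return undefined;
}

// Check the generated parameters and build the launch request.
// Payment is deliberately absent: the user confirms inside the mini-app.
function buildLaunchRequest(entry: IndexEntry, params: Record<string, string>): LaunchRequest {
  const missing = entry.requiredParams.filter(
    (p) => !Object.prototype.hasOwnProperty.call(params, p)
  );
  if (missing.length > 0) {
    throw new Error(`Missing parameters: ${missing.join(", ")}`);
  }
  return { appId: entry.appId, startParams: params };
}

const matched = findMiniApp("Book a flight to NYC.", storeIndex);
const launchRequest = matched
  ? buildLaunchRequest(matched, { dest: "JFK", date: "2026-02-01" })
  : undefined;
```

Rejecting a request with missing parameters keeps the probabilistic step (parameter filling) separate from the deterministic one (launching), so a bad guess by the model fails early instead of opening a half-filled screen.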

3. Generative UI: The End of Static Screens

The convergence of FinClip and AI leads us to a radical new concept: Generative UI.

Today, app interfaces are hard-coded by designers. But user intents are infinite. Why should a user navigating a "Complex Insurance Claim" see the same static menu as a user "Just checking their deductible"?

With FinClip, the UI can be generated on the fly.

The JSON-to-UI Pipeline

FinClip mini-apps are rendered from code that can be represented as text (JSON/WXML). Since LLMs excel at generating text, they can generate UI.

The Workflow of 2027:

- User: "I need to file a claim for a car accident. Here is the photo of the damage."

- AI: Analyzes the photo. Determines the necessary data fields (Date, Location, Damage Type, Photo Upload).

- Generation: The AI constructs a specific FinClip Mini-App schema—a simple form containing only the relevant fields.

- Rendering: The FinClip Runtime renders this temporary, ephemeral mini-app instantly.

- Completion: The user fills it out. The mini-app submits the data and then dissolves.

The user never navigated a menu. They never downloaded a specific "Claims App." The interface was summoned into existence by their intent. FinClip is the engine that makes this "Just-in-Time App" possible.
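If a model is generating the form, the runtime should not trust its output blindly. A minimal sketch of the idea, assuming a hypothetical `FormSchema` format (this is not FinClip's real schema), is to parse the generated JSON and accept only a fixed set of field types before anything is rendered:

```typescript
type FieldType = "date" | "text" | "select" | "photo";

interface FormField {
  name: string;
  label: string;
  type: FieldType;
}

// Hypothetical schema for an ephemeral form; the shape is an assumption.
interface FormSchema {
  title: string;
  fields: FormField[];
}

const ALLOWED_TYPES: string[] = ["date", "text", "select", "photo"];

// Parse model output and reject anything outside the allowed vocabulary.
function parseGeneratedForm(raw: string): FormSchema {
  const data: unknown = JSON.parse(raw); // throws on malformed JSON
  if (typeof data !== "object" || data === null) {
    throw new Error("Schema must be a JSON object");
  }
  const candidate = data as { title?: unknown; fields?: unknown };
  const title = candidate.title;
  const rawFields = candidate.fields;
  if (typeof title !== "string") {
    throw new Error("Schema needs a string title");
  }
  if (!Array.isArray(rawFields) || rawFields.length === 0) {
    throw new Error("Schema needs at least one field");
  }
  const fields: FormField[] = [];
  for (const item of rawFields) {
    if (typeof item !== "object" || item === null) {
      throw new Error("Each field must be an object");
    }
    const f = item as { name?: unknown; label?: unknown; type?: unknown };
    const fieldName = f.name;
    const label = f.label;
    const fieldType = f.type;
    if (typeof fieldName !== "string" || typeof label !== "string") {
      throw new Error("Each field needs a string name and label");
    }
    if (typeof fieldType !== "string" || ALLOWED_TYPES.indexOf(fieldType) === -1) {
      throw new Error(`Unsupported field type for "${fieldName}"`);
    }
    fields.push({ name: fieldName, label, type: fieldType as FieldType });
  }
  return { title, fields };
}

// Example: the claim form from the workflow above, as a model might emit it.
const claimForm = parseGeneratedForm(JSON.stringify({
  title: "Car accident claim",
  fields: [
    { name: "date", label: "Date", type: "date" },
    { name: "location", label: "Location", type: "text" },
    { name: "damageType", label: "Damage Type", type: "select" },
    { name: "photo", label: "Photo Upload", type: "photo" },
  ],
}));
```

Only a schema that passes this check would be handed to the renderer; anything else is discarded and the model can be asked to try again.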

4. The "Agentic" Super App

For enterprises, this shifts the definition of a Super App.

It is no longer just a "Mall" for humans; it is an Operating System for Agents.

Reducing Hallucinations

One of the biggest risks of AI is hallucination (making things up).

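By constraining the AI to act only through certified FinClip Mini-Apps, enterprises create a safety layer. The model may still hallucinate in conversation, but it cannot turn a hallucination into an action outside the certified set.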

- The AI cannot "invent" a bank transaction. It can only call the official "Transfer" mini-app.

- The FinClip Sandbox ensures that the AI cannot access data it shouldn't (e.g., reading the user's password field).
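The constraint described above amounts to an allowlist check between the model and the runtime. The sketch below assumes a hypothetical registry (`certifiedApps`) and guard function (`guardAction`); the app IDs and parameter names are illustrative, not real FinClip identifiers.

```typescript
interface AgentAction {
  appId: string;
  params: Record<string, string>;
}

interface GuardResult {
  allowed: boolean;
  reason?: string;
}

// Hypothetical registry: certified mini-app IDs mapped to the
// parameters an agent is permitted to pre-fill.
const certifiedApps: Record<string, string[]> = {
  "com.bank.transfer": ["toAccount", "amount"],
  "com.bank.balance": [],
};

// Reject any action that targets an uncertified mini-app or tries to
// set a parameter the certified mini-app does not expose.
function guardAction(action: AgentAction): GuardResult {
  if (!Object.prototype.hasOwnProperty.call(certifiedApps, action.appId)) {
    return { allowed: false, reason: `Unknown mini-app: ${action.appId}` };
  }
  const allowedParams = certifiedApps[action.appId] || [];
  for (const key of Object.keys(action.params)) {
    if (allowedParams.indexOf(key) === -1) {
      return { allowed: false, reason: `Parameter not allowed: ${key}` };
    }
  }
  return { allowed: true };
}

const transferCheck = guardAction({
  appId: "com.bank.transfer",
  params: { toAccount: "12345", amount: "50.00" },
});
const inventedCheck = guardAction({ appId: "com.bank.secretRefund", params: {} });
```

Here `transferCheck.allowed` is true, while `inventedCheck.allowed` is false because the model named a mini-app that does not exist in the registry. The guard does not make the model more accurate; it only ensures that an invented action never reaches the runtime.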

Private AI on the Edge

Privacy is paramount. Users do not want their banking data sent to a public AI model in the cloud.

FinClip’s architecture supports Hybrid Execution.

- A Small Language Model (SLM) runs locally on the user's device (embedded in the Super App).

- It processes the user's voice command locally.

- It triggers a local FinClip mini-app.

- No data leaves the device.

This "Edge AI + Edge Runtime" combination is the holy grail of privacy-first personal assistants.

Conclusion: The Convergence of Ecosystem and Intelligence

We are standing at the precipice of a UI revolution. The grid of icons that has defined the smartphone era for nearly 20 years is about to be replaced by Intent-Driven Interfaces.

In this new world, the value of an app is defined by its interoperability with AI.

Monolithic, closed apps will be invisible to AI Agents. They will be left behind.

Super Apps, structured as ecosystems of accessible, standardized Mini-Apps, will become the preferred playground for AI.

FinClip is more than just a container technology; it is the Action Protocol for the AI era. It provides the structured, secure, and dynamic environment where digital brains can interact with digital services.

To build for the future, you must build a Super App. Not just for your users, but for the Agents that will serve them.